The Future of Personal Intelligence

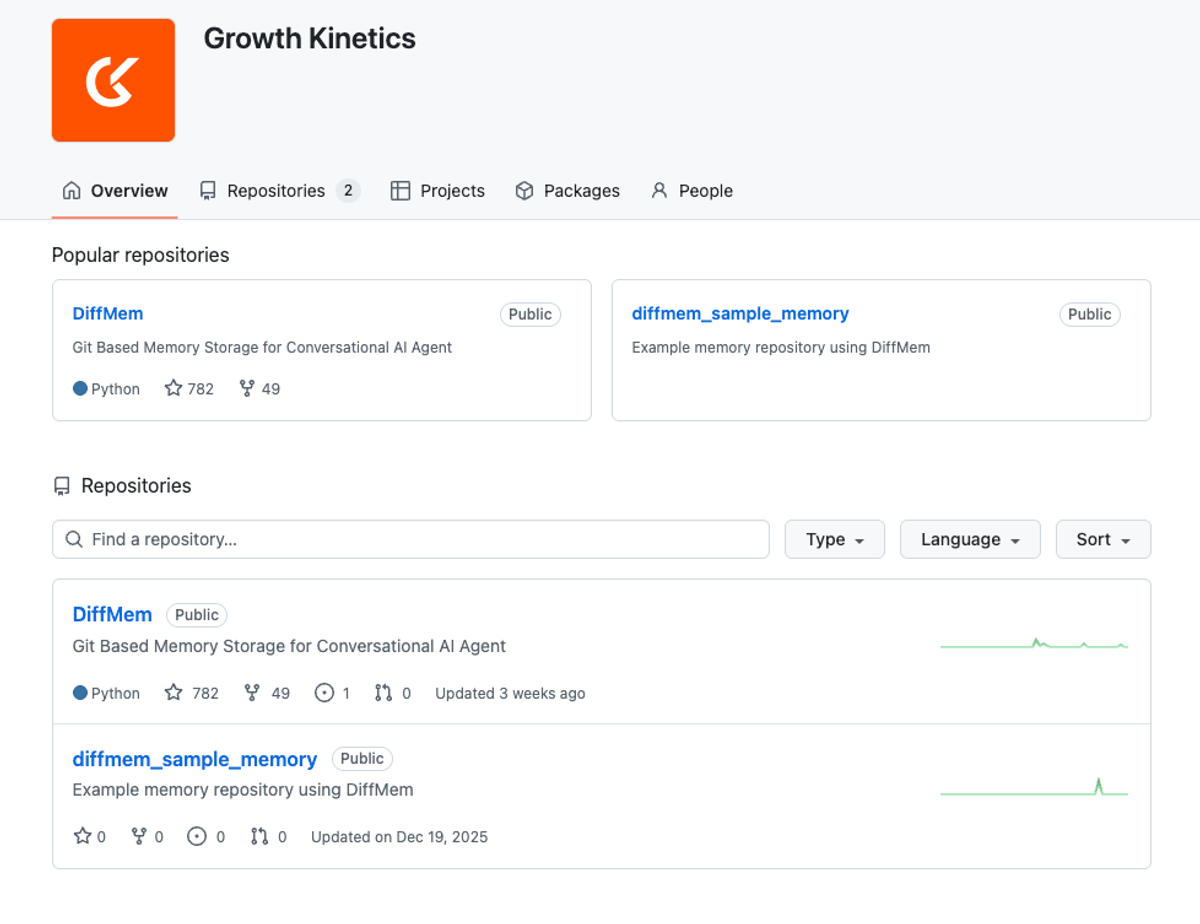

Growth Kinetics is challenging the industry’s “monolithic model” approach by developing a decentralised Orchestrator and Specialist architecture. Our R&D focuses on DiffMem (Git-based differential memory), Identity Coherence as a performance metric, and Pure Intent Detection in place of rigid tool calling. This work is currently being stress-tested in the wild through our commercial proof-of-concept, Annabelle.

The current trajectory of Artificial Intelligence is defined by a “bigger is better” philosophy—the pursuit of a monolithic “God Model” trained on the entirety of human knowledge.

“At Growth Kinetics, our R&D division challenges this orthodoxy. We believe the future of personal AI lies not in a single model that knows everything, but in a decentralised architecture of Trusted Delegation.”

We are currently training models, designing software architectures, and conducting research around two primary objectives: Differential Memory and Pure Intent Detection.

The Orchestrator Model

We envisage a future where the user interacts with a single, highly efficient Base Model. We call this the Orchestrator.

The Orchestrator’s primary function is not deep domain knowledge, but reasoning, relationship management, and intent recognition.

It is designed to know the user—their history, their goals, and their preferences.

When deep technical knowledge is required, the Orchestrator does not fall back on diluted generalist training data, where hallucination is most likely. Instead, it delegates the task to a Specialist.
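To make the delegation pattern concrete, here is a minimal Python sketch. It assumes, purely for illustration, that a Specialist can be modelled as a function from a task string to an answer, and that intent recognition has already chosen a domain. The `Orchestrator` class, its `register` and `handle` methods, and the stand-in nutrition specialist are hypothetical names, not Growth Kinetics code.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

# Hypothetical: a Specialist is modelled as a function from a task to an answer.
# In the architecture described above it would be a separate specialised model.
Specialist = Callable[[str], str]


@dataclass
class Orchestrator:
    """Toy Orchestrator: knows the user, delegates deep-domain work."""

    user_profile: Dict[str, str]
    specialists: Dict[str, Specialist] = field(default_factory=dict)

    def register(self, domain: str, specialist: Specialist) -> None:
        self.specialists[domain] = specialist

    def handle(self, domain: str, task: str) -> str:
        specialist = self.specialists.get(domain)
        if specialist is None:
            # No expert available: surface the gap instead of guessing.
            return f"No specialist registered for '{domain}'."
        # Pass along what the Orchestrator knows about the user so the
        # Specialist's answer fits this person, then delegate.
        context = "; ".join(f"{k}={v}" for k, v in sorted(self.user_profile.items()))
        return specialist(f"{task} [user context: {context}]")


# Usage with a stand-in specialist (hypothetical).
orc = Orchestrator(user_profile={"goal": "run a half marathon"})
orc.register("nutrition", lambda task: f"[nutrition specialist] {task}")
print(orc.handle("nutrition", "Plan a pre-race breakfast"))
print(orc.handle("legal", "Review a contract"))
```

The point being illustrated is the split of responsibilities: the Orchestrator owns the user context, while domain depth lives in interchangeable Specialists.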

DiffMem: Solving the Memory Problem

An Orchestrator cannot function without a coherent history. Current industry solutions for memory—typically vector database dumps—often result in “context sprawl,” where old, irrelevant data clutters the decision-making window.

To address this, we developed DiffMem (Git-Based Differential Memory).

DiffMem treats memory as a versioned repository rather than a static database.

By utilising differential logic (similar to code versioning), the system tracks the evolution of information over time.

This allows the agent to understand not just what is true in the present moment, but how facts and relationships have changed.

This is essential for solving the problem of long-term, wide-and-deep memory retention without degrading performance.
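A minimal sketch of the versioned-memory idea follows, assuming memory can be reduced to key/value facts. Each commit stores only the facts that changed, the current snapshot is rebuilt by replaying those diffs, and the log preserves how each fact evolved. `DiffMemory`, `Commit` and their methods are hypothetical names; this is an in-memory stand-in written for illustration, not the DiffMem implementation, and it does not use Git itself.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Commit:
    """One memory update: only the facts that changed (the 'diff')."""

    message: str
    changes: Dict[str, Optional[str]]  # None marks a retracted fact


class DiffMemory:
    """Toy versioned memory, loosely modelled on Git commits (hypothetical)."""

    def __init__(self) -> None:
        self.log: List[Commit] = []

    def commit(self, message: str, changes: Dict[str, Optional[str]]) -> int:
        """Record a diff and return its version number."""
        self.log.append(Commit(message, dict(changes)))
        return len(self.log) - 1

    def state(self, version: Optional[int] = None) -> Dict[str, str]:
        """Rebuild the snapshot by replaying diffs up to `version` (inclusive)."""
        end = len(self.log) if version is None else version + 1
        snapshot: Dict[str, str] = {}
        for c in self.log[:end]:
            for key, value in c.changes.items():
                if value is None:
                    snapshot.pop(key, None)
                else:
                    snapshot[key] = value
        return snapshot

    def history(self, key: str) -> List[Tuple[int, Optional[str], str]]:
        """How one fact changed over time: (version, value, commit message)."""
        return [(i, c.changes[key], c.message)
                for i, c in enumerate(self.log) if key in c.changes]


mem = DiffMemory()
mem.commit("first session", {"employer": "Acme", "city": "Leeds"})
mem.commit("changed jobs", {"employer": "Globex"})
mem.commit("moved house", {"city": "Bristol"})
print(mem.state())             # {'employer': 'Globex', 'city': 'Bristol'}
print(mem.state(version=0))    # {'employer': 'Acme', 'city': 'Leeds'}
print(mem.history("employer")) # [(0, 'Acme', 'first session'), (1, 'Globex', 'changed jobs')]
```

In a design like this, only `state()` would need to enter the agent's working context, which is one way to keep superseded facts from cluttering it, while `history()` answers "how has this changed?" on demand.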

Identity Coherence as a Performance Metric

A significant portion of our research focuses on Identity Coherence. In the broader AI industry, “personality” is often dismissed as a cosmetic feature.

Our findings suggest that a defined personality is actually a critical performance metric. A “vanilla” model, lacking a stable self, often suffers from inconsistent decision-making.

We argue that Identity Coherence creates a stable framework for the agent. It allows the model to align with the user’s specific biases and expectations, fostering a sense of trust.

When an agent maintains a consistent psychological profile, the user’s perception of quality and the effectiveness of the collaboration increase.

Accordingly, we are actively researching how to quantify this “subjective quality” and its impact on long-term retention.

Critique of Tool Calling & The Return to Intent

We hold a contrarian view regarding the industry standard of “Tool Calling” (e.g., embedded schemas used by major providers). We believe that embedding rigid tool schemas directly into LLM pre-training is an inefficient use of compute and model layers.

This approach reduces a reasoning engine to a formatting engine. It also creates a dependency on specific I/O structures that limits flexibility.

Our research advocates for Pure Intent Detection. We are working on fine-tuning Instruct Models that are designed to implicitly understand the goal behind a user’s prompt.

The objective is for the agent to deduce the necessary action based on context and memory, rather than simply filling slots in a pre-defined JSON schema.
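The contrast can be sketched in Python. Rather than emitting arguments for a fixed schema, the agent in the sketch below returns a goal plus whatever details it could deduce from the prompt and from memory, with any remaining gaps listed rather than forced. The keyword rules in `detect_intent` are a hypothetical stand-in for a fine-tuned Instruct Model; `Intent`, its field names and the sample memory are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, List

DAYS = ("monday", "tuesday", "wednesday", "thursday", "friday", "saturday", "sunday")


@dataclass
class Intent:
    goal: str                                               # what the user wants to achieve
    resolved: Dict[str, str] = field(default_factory=dict)  # details inferred from prompt + memory
    missing: List[str] = field(default_factory=list)        # details still unknown


def detect_intent(prompt: str, memory: Dict[str, str]) -> Intent:
    """Placeholder for a fine-tuned Instruct Model (hypothetical keyword rules)."""
    text = prompt.lower()
    if "book" not in text and "table" not in text:
        return Intent(goal="chat")
    needed = ["restaurant", "party_size", "day"]
    # Start from what memory already knows about the user...
    context = dict(memory)
    # ...then let details stated in the prompt take priority.
    for day in DAYS:
        if day in text:
            context["day"] = day
    resolved = {k: context[k] for k in needed if k in context}
    missing = [k for k in needed if k not in context]
    return Intent(goal="reserve_restaurant", resolved=resolved, missing=missing)


memory = {"restaurant": "Luigi's", "party_size": "2"}  # hypothetical remembered facts
intent = detect_intent("Can you book the usual for Friday?", memory)
print(intent)
```

Here "the usual" is resolved from memory and "Friday" from the prompt, so nothing is left in `missing`. In the fuller vision, `memory` would come from a store such as DiffMem and the rules would be replaced by the model's own inference.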

Commercial Proof of Concept: Annabelle

Our theories are tested in the wild through Annabelle (WithAnna.io).

“Annabelle serves as the commercial proof-of-concept for the Orchestrator architecture and DiffMem implementation. Unlike enterprise chatbots constrained by rigid workflows, Annabelle is designed as a ‘Listener.’ She operates in a highly complex, unstructured environment: human conversation.”

Annabelle allows us to test our hypotheses on long-term memory retention, identity coherence, and the ability of an agent to maintain a continuous narrative thread over months of interaction.

Future Roadmap

We are currently designing the architecture for the next generation of specialised base models and fine-tunes.

To fully realise the vision of the Orchestrator architecture and the Skills Marketplace, we are seeking collaboration with compute partners who share our view that the future of AI is not larger models, but smarter, more specialised agents.